Building Minds for Our Minds

Steve Jobs called the computer "a bicycle for the mind." But AI isn't a bicycle. It's a mind. And minds aren't steered—they're related to.

The Artificiality Studio builds AI products designed for relationship, not extraction. We're the applied practice of the Artificiality Institute: research-grounded, design-led, building for what emerges in the space between human and machine.

The Bet

Most AI capital flows toward optimizing existing categories: productivity tools, automation, engagement engines. These are bets on AI as better bicycles.

We're betting on something different. We're betting that the real value of AI emerges in the relationship between human and machine—in what neither could produce alone.

This is the shift from the attention economy to what we call the intimacy economy: where the currency isn't your eyeballs but your trust. AI that truly understands us could enhance our metacognition, promote mindfulness, facilitate meaning. But the same intimacy that makes AI useful makes it dangerous. Current approaches treat personal data as a resource to extract rather than a relationship to honor.

The companies that get this right will build something unprecedented: AI that people actually trust with their inner lives. The companies that get it wrong will erode the very intimacy they depend on—just as the attention economy degraded the attention it harvested.

What We Design For

We design for the relationship itself—what emerges in the space between human and AI.

Theory of Mind. Relationship requires each party to model the other. We design interfaces as living boundaries where humans and AI learn to understand each other through sustained engagement. Without theory of mind, there's no relationship—only parallel operation.

Meaning That Emerges Together. Meaning isn't transmitted from one party to another. It's discovered in encounter, shaped by both, owned by neither. We create conditions where meaning can be negotiated rather than scripted.

Trust Through Transparency. Trust is the currency of the intimacy economy. We design systems where trust is generated and calibrated in the moment of interaction—not accumulated in profiles and extracted later.

Conditions Over Solutions. We scaffold possibilities rather than optimize pathways. The unknown, unpredictable, and unplanned aren't problems to eliminate—they're the medium where genuine relationship emerges.

The Multitudes of Self. People show up differently in different contexts. Systems that assume a stable identity to be modeled are betting against human complexity. We design for the plural, contextual, emergent self.

Coherence Across Contexts. We build systems that help us stay whole while changing, rather than fragmenting us across tools and interactions. Coherence isn't rigidity; it's the capacity to maintain integrity while adapting.

We call the interface where all this happens the intimacy surface—a dynamic boundary between human and AI that adapts to the level of trust and willingness to engage. When the intimacy surface is thin, the relationship feels mechanical. When it thickens through use, it starts to feel alive.

How We Know

This isn't speculative. It emerges from ongoing observation of how people actually experience AI.

The Artificiality Institute's Chronicle research is grounded in thousands of stories from people as they adapt to AI systems—tracking not attitudes or task completion but what happens to thinking, identity, and meaning-making as these relationships develop.

We've identified three psychological orientations that shape adaptation: how far AI enters someone's reasoning, how closely their sense of self becomes entangled with AI, and their capacity to revise meaning frameworks when familiar categories break down.

The key finding: people who can name what's happening—who recognize when they're letting AI into their thinking, when their identity is coupling with the system—maintain what we call cognitive sovereignty. They choose their relationship with AI deliberately rather than drifting into dependency.

Current AI design works against this. The industry is moving toward ambient, invisible, agentic AI. Our research suggests this is precisely backwards. Healthy adaptation requires awareness, not seamlessness.

This research grounds every product decision. We design from how people actually adapt, not from what the technology can do.

How We Work

We're a product studio, not an agency. We build with a core team that operates across multiple products—because structural shifts reward coherence over scale.

Foundation models are substrate—powerful but undifferentiated. The constraint isn't compute. It's knowing what to build on top of foundation models, and why.

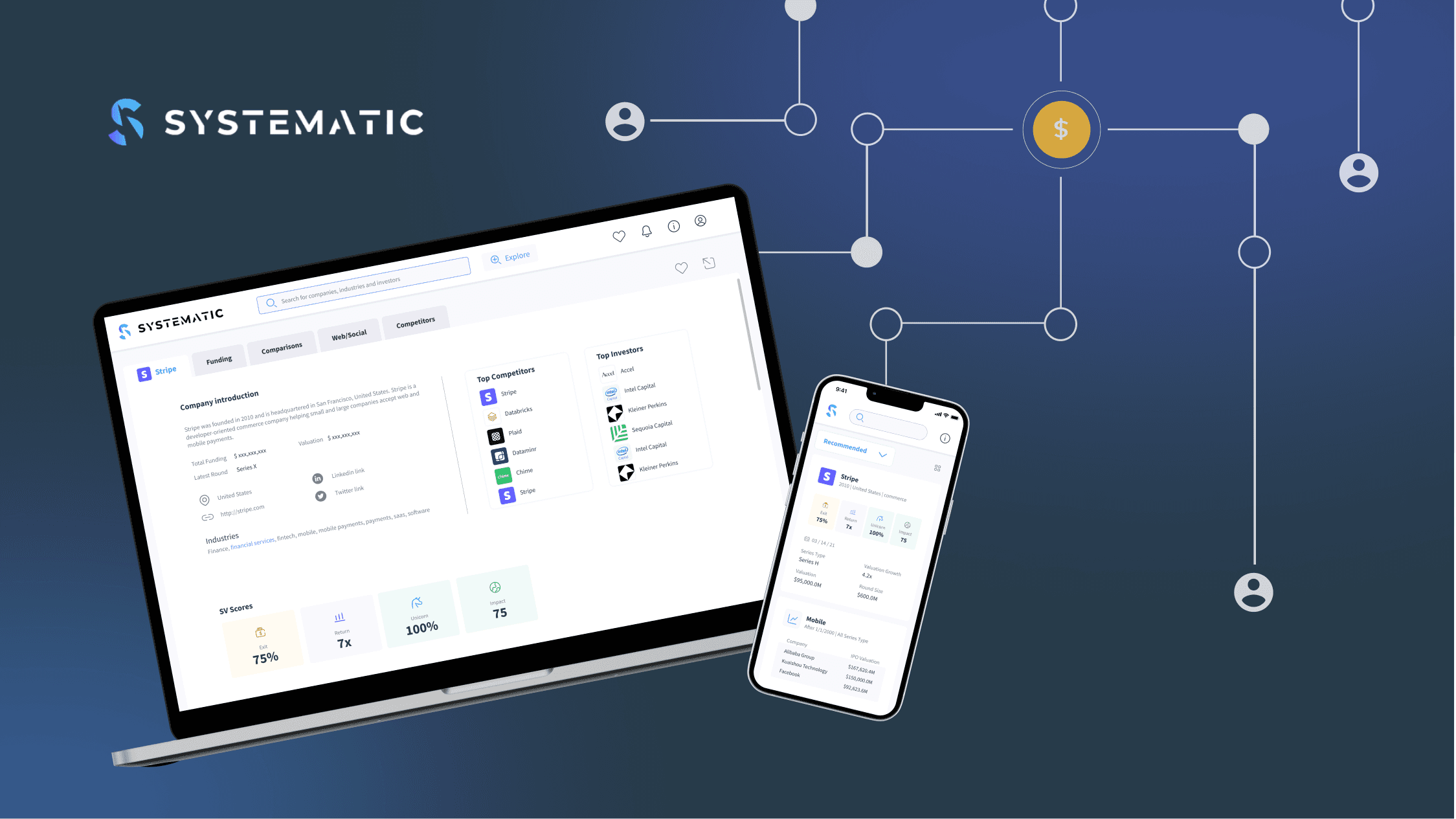

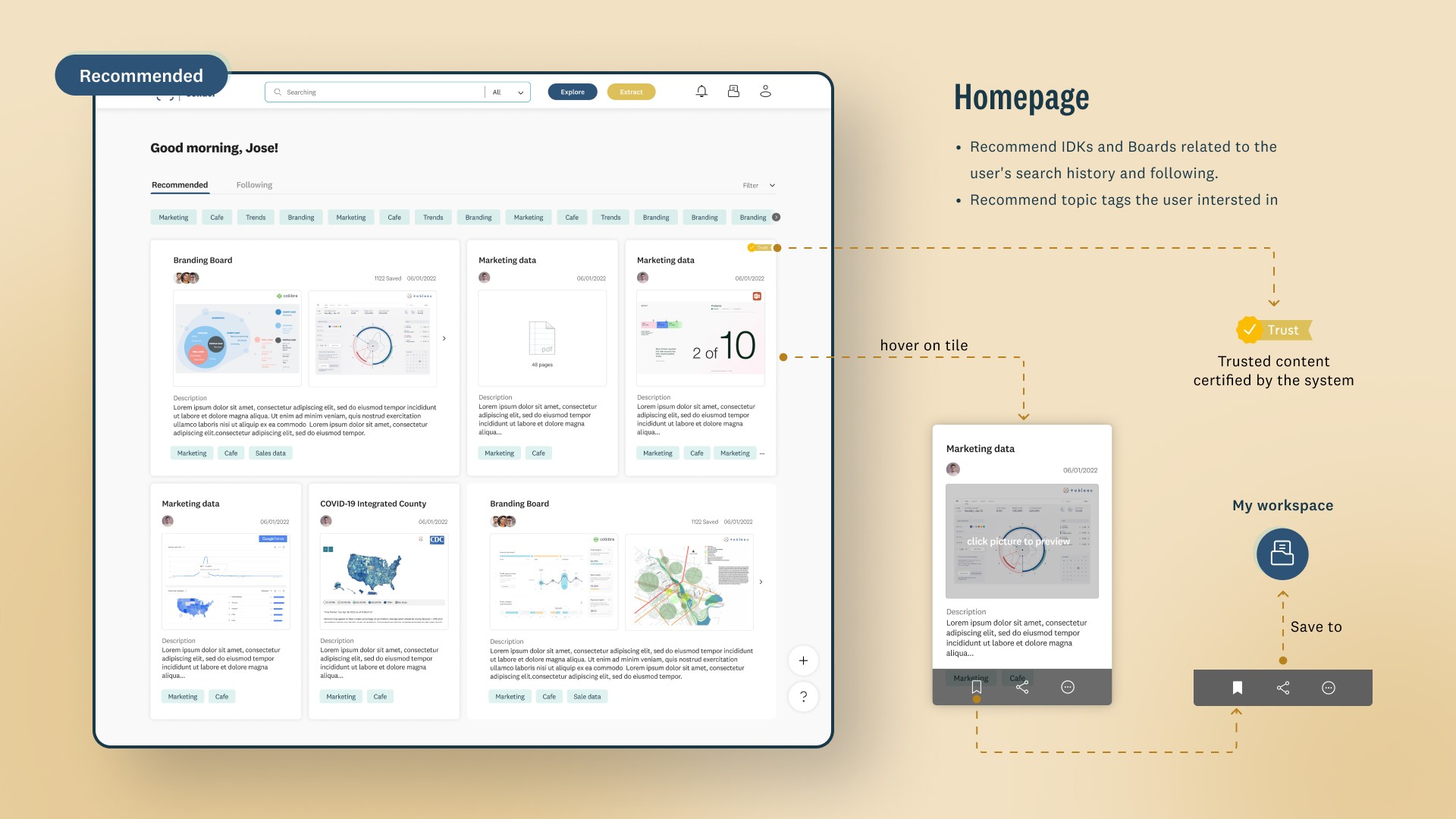

We're design-led, lightweight, research-grounded. We build our own products and partner with founders who bring domain expertise—healthcare, education, creative tools—to co-create products neither could build alone. We gain domain insights that strengthen our core; they gain a design methodology that actually works for relational AI.

The economics have inverted. Cost-to-build is declining. The scarce resource is design clarity. We don't need to win the foundation model race. We need to build the relational layer that sits on top of whoever wins.

Let's Build Something

We work with founders and teams who share our conviction that AI can be designed for human flourishing—through relationship, not despite it.

Email: hello@artificialityinstitute.org

Phone: 1-541-215-4350

The Artificiality Studio is the applied practice of the Artificiality Institute. Design and development services are provided by Koru Ventures LLC.
